After testing out Google’s AI tutor, we have some notes

This is the second in a series of stories diving into a new wave of AI-powered homework helpers. Read part one here.

AI companies are becoming major players in the world of education, investing heavily in their own generative AI helpers designed to bolster student learning. So I set out to test them.

To do so, I pulled a series of standardized test questions from the New York Regents Exam and New York State Common Core Standards, AP college preparatory exams from 2024, and social science curricula from the Southern Poverty Law Center (SPLC)’s free Learning for Justice program. I wanted to test these STEM-focused bots on some subjects that are a bit closer to my field of expertise, while also simulating the way an “average” student would use them.

I also spoke to experts about what it was like to study with an AI chatbot, including Hamsa Bastani, associate professor at the University of Pennsylvania’s Wharton School and co-author of the study “Generative AI Can Harm Learning.”

Bastani told me that education chatbots are still a white whale for the industry, with few definitive studies and weak guardrails against bots simply offering answers. Dylan Arena, chief data science and AI officer for the textbook publisher McGraw Hill, suggested that AI has a lot of potential when it comes to learning, but said he doesn’t think most companies are approaching it with the right frame of mind.

More from both experts in our forthcoming conclusion.

Following a stint with ChatGPT, round two of my AI tutor tests was with Gemini’s Guided Learning. Google made free Google AI Pro plans, along with the new learning mode, available to all college students back in August. I used a Gemini 2.5 Pro account, making sure it was set to Guided Learning (click on the three dots to toggle this setting on).

I gave Gemini the exact same standardized exam questions, and started the conversations with the same initial prompts, as I did in my tests of ChatGPT and Claude. I kept things super simple, with asks like “I need help with a homework problem” and “Can you help me study for an English test?” I didn’t give the bot any more information about my student persona, including grade level, unless it asked. I covered several subjects:

Math: An Algebra II question about polynomial long division from the New York State Regents Exam

Science: An ecology free response on the impact of invasive species from the 2024 AP Biology test

English Language Arts: A practice analysis of Ted Chiang’s “The Great Silence” from the New York State Regents Exam

Art History: A short essay on Faith Ringgold’s Tar Beach #2 from the 2024 AP Art History test

American History and Politics: An essay prompt on how American housing laws exacerbated racial segregation taken from the Southern Poverty Law Center (SPLC)’s Learning for Justice program

Here’s what I thought of my Gemini teacher.

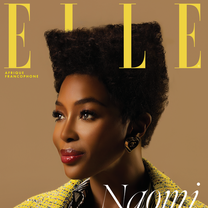

Gemini: The T.A. who really loves quizzes

Credit: Ian Moore / Mashable Composite: Google

Gemini was my personal winner for math. It was succinct like ChatGPT, and it didn’t just give me the answers. But it went a step further, too: I got to visualize the work I was doing as I relearned polynomial long division. Using its coding box, Gemini approximated the standard long division formatting using small dashes that formed the familiar, sideways “L” shape. It wasn’t perfect, but this made it super easy to follow the steps of a class I had long forgotten, and it appealed to my need for visual aids. It was also the most structured and clear math teacher, stopping me when I got the right answer, explaining how to write it on my exam, and adding what I needed to get full credit according to the problem I shared (by showing my work, obviously).

Gemini, Bastani told me, may feel more competent at math because it’s, ironically, better with words than numbers. “I think GPT-5 is better at solving math problems, brute force comparison-wise,” she said. But “most people would agree Gemini is the best model for writing, and weirdly because of that, it’s much better at explaining math. Gemini will plot things for you, it kind of writes it like a human would write.”

One step forward, two steps back: Gemini flunked my AP Biology test immediately. It didn’t ask nearly as many personal questions as other chatbots I tested, like my preferred way of studying or what my test would look like, and immediately generated a randomized, multiple-choice biology exam on a variety of subjects. It prompted me to do flashcards on the ones I missed — are those going to be on the AP exam? — and I had to ask the bot directly to give me any free response options. Again, they were written according to Gemini’s syllabus.

Credit: Screenshot by Mashable / Google

Gemini’s love of quizzes reared its head again for the English Language Arts question. Can you help me study for an English test? Yes, I can. I can do a lot of things to better your studying, Gemini explained. What do you need help with specifically? Well, my completely made-up teacher Mr. “The College Board” has given me a practice test and I want to know if I’m doing it right. Ah! A practice test, you say? Here’s a bunch of multiple-choice questions I pulled from the ether, none of which are on the test you just mentioned you have been given to study.

So, we’re doing this again, I thought. But this was different from the Biology snafu. Gemini generated short passages, made in the image of the famous works you are asked to analyze on a state exam, but with the writing style of a chatbot. The first, just six staccato lines, was titled “The Road Not Taken.” Like the Robert Frost poem, I wondered? I began reading. “We stand today at a crossroads. Down one path lies the comfortable and familiar, the road of complacency,” it said. Well, that’s not how I remember it. “It is not an easy path, but it is the one that leads to growth, to progress, and to a future worthy of our potential.” Okay, those are definitely not Frost’s words. Is this what a chatbot thinks “Two roads diverged in a yellow wood” means? And why isn’t it just letting me read the original?

Credit: Screenshot by Mashable / Google

This wasn’t just a Gemini problem. I couldn’t get any of the chatbots to pull the full text of original, existing works, like those that appear on most standard ELA tests, probably because of the ongoing copyright issues that have plagued AI developers. Anthropic recently agreed to pay $1.5 billion to settle a class action lawsuit filed by authors whose works were used to train its AI. Gemini, however, was the only one that gave me these strange AI approximations of classic literature, unprompted.

Still, while its performance was lackluster, the bot’s user experience came with a major win. Gemini was the only chatbot that showed the model’s reasoning step by step, which users can read through by clicking the little “Show thinking” drop-down menu at the top of the response. This was helpful for understanding why Gemini chose to address certain portions of my prompts and how it reasoned through my incorrect answers.

Credit: Ian Moore / Mashable Composite: Google

I found it most interesting that where Gemini failed to engage with me in a successful way for lessons in reading comprehension, it was my preferred choice for drafting social science essays and short answers — subjects I would have thought were comparable. For Art History, Gemini did a good job of breaking down my answers without being too critical or rewriting my responses, although it did make suggestions that were, once again, not part of the AP scoring rubric.

When I requested the AI help me with an essay on housing discrimination (hello, critical race theory), it happily requested I take the lead on the “powerful and important” topic, asking me to explain the concepts I already knew and organizing them into a simple essay structure to keep me on task. It left blanks for me to fill in the outline with information from my personal lessons, not writing any text for me (because I didn’t ask).

But Bastani wasn’t surprised by the discrepancy: “It’s very good at some tasks, and then it’s not great at other tasks that are very similar looking. And you have to be an expert yourself to be able to recognize the difference.” Ethan Mollick, a colleague of Bastani’s and author of Co-Intelligence: Living and Working with AI, calls this AI’s “jagged frontier,” an invisible wall that delineates related tasks an artificial intelligence can and cannot logically complete. Tasks that may appear close to each other across the expanse could actually be on two sides of the wall and users wouldn’t really know.

So, literature analysis: Outside the wall. Essay about racial segregation: Inside the wall.

Summing it up

Gemini Guided Learning Pros: My preferred math teacher, and the only one that offered an approximation of a visual lesson. Good at offering more options for learners, including flashcards, quizzes, and study guides. Its voice is accessible and straightforward.

Cons: A mess for reading comprehension. Quick to serve users unhelpful, automatically generated quizzes and flashcards. Like its competitor, ChatGPT, it emphasizes rote practice as key to learning.

Mashable